IEF - Impact Evaluation Framework

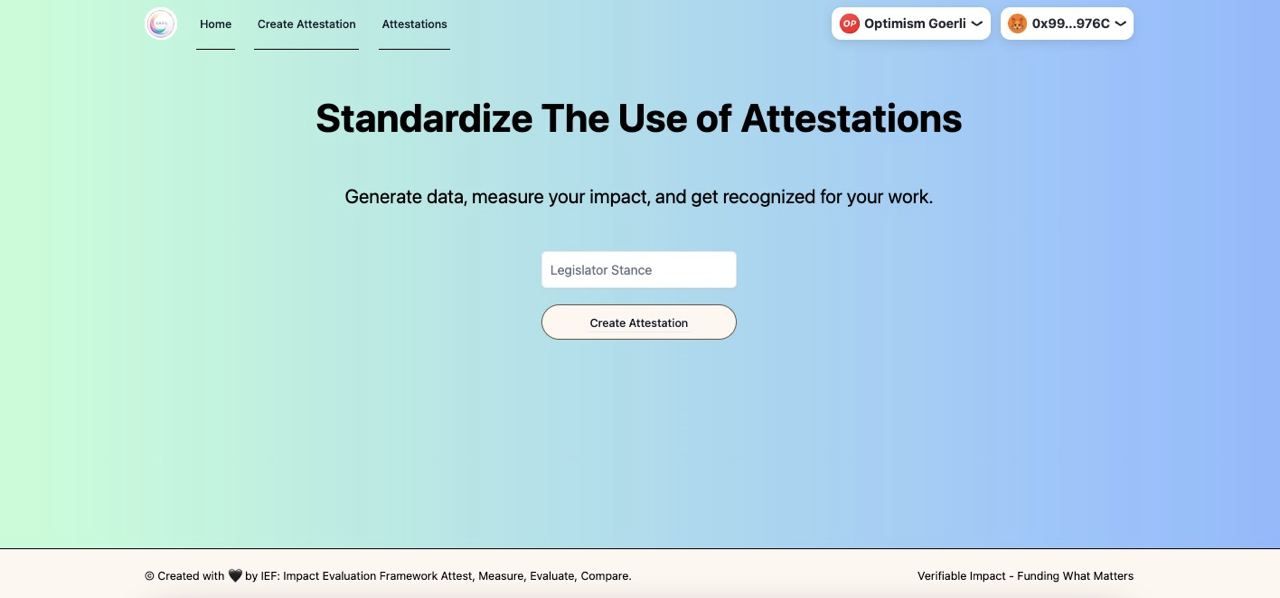

Standardising impact measurement of public goods projects through attestations.

Project Description

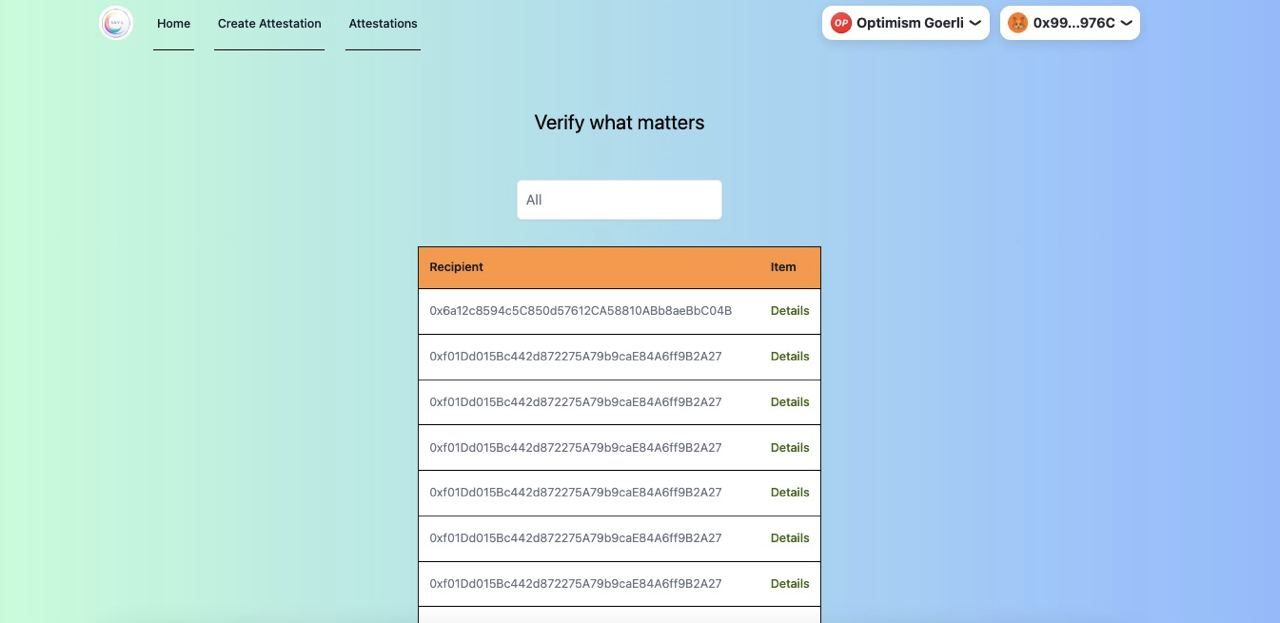

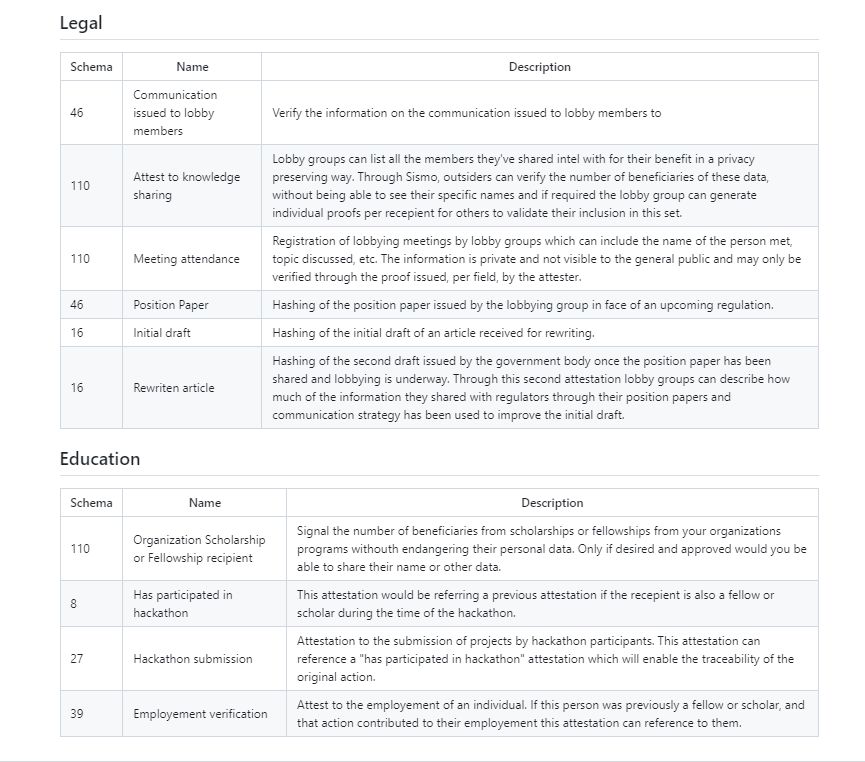

Leveraging EAS and Sismo, IEF proposes an opt-in, standardised series of attestations that organisations can use to measure comparable data points and track their impact in the web3 ecosystem. By generating data on the actions they carry out, projects can trace their work on-chain, and funders can verify that impact and reward projects for their contributions to the web3 ecosystem.

Measuring the impact generated by public goods projects in web3 is hard. This obstacle was evident during Optimism's RetroPGF Round 2, where measuring impact was a challenge both for nominees (who didn't know how to measure their impact for others to see) and for badgeholders (who didn't know how to assess the impact a project had).

Why is this a problem? An inability to measure the impact of a public goods project creates ripple effects that harm the Ethereum ecosystem's ability to thrive in the future by:

Underfunding Public Goods: Projects that are unable to measure and communicate the impact of the work they have done will leave money on the table that badgeholders would have been willing to commit given the right information. Taken to the extreme, if not enough resources are allocated to a project, the public good being provided by it may cease to exist.

Top-down incomplete evaluations: In the second round of RetroPGF, badgeholders were given limited guidance on how to assess the impact of the proposed projects. This created a two-sided problem.

On one hand, the badgeholders, responsible for evaluating the projects, encountered difficulties when they had to assess projects in fields that were outside of their areas of expertise or familiarity. Without sufficient resources or context-specific knowledge, their ability to thoroughly evaluate the effectiveness and potential impact of these projects was impaired.

On the other hand, and perhaps more critically, the nominees who proposed these projects faced top-down evaluations that might not have taken into account Key Performance Indicators (KPIs) significant to their on-the-ground operations. This issue stemmed from a potential disconnect between the evaluators' perspectives and the realities of hands-on fieldwork.

There are specific indicators and factors that are vitally important and unique to ground-level work, which might not be visible, known, or may even be dismissed as irrelevant from the standpoint of someone who hasn't been involved in such in-situ operations. Consequently, these evaluations might have overlooked some crucial aspects of the projects, thereby affecting the comprehensiveness and accuracy of the assessments.

Inability to scale: During the RetroPGF process, the limited amount of information supplied to badgeholders posed a significant challenge. It necessitated an increased commitment of time as badgeholders needed to liaise with their counterparts to determine an effective approach for assessing the impact of projects. They were compelled to sift through the provided information, identify any gaps in data, and deliberate over which metrics should be the determining factors in the allocation of votes.

This process was time-consuming and could become unmanageable as the number of projects involved in RetroPGF grows. RetroPGF is designed and expected to attract an increasing number of participating projects. Under the current system, however, that growth may result in an overwhelming workload for badgeholders, who may find it increasingly challenging to thoroughly review each project and thoughtfully distribute their votes within the time available.

As it stands, without more comprehensive guidelines and support, the expanding scope of RetroPGF might exceed the badgeholders' capacity to perform careful, conscientious evaluations, compromising the effectiveness and fairness of the entire process. There is an urgent need to streamline and enhance the evaluation framework to ensure its scalability and efficacy as the initiative grows.

How it's Made

A simple, but currently missing, foundation.

IEF proposes a 3-legged approach to address the challenge of measuring the impact of public goods projects applying for public goods funding. This process is only possible with the creation and upkeep of an Impact Evaluation Framework that leverages attestations. To ensure that data points and impact measurements are comparable among impact generators, we leveraged EAS, which is itself a standard for attesting to a vast array of events, things, and more, both off-chain and on-chain.

EAS is the foundation of this project, as it enables the generation of data points that impact generators can reference and public goods funders can verify. While organisations currently use substitutes such as POAP, EAS's permissionless, decentralized and versatile issuance is key for widespread adoption. EAS is also the only existing technology that can enable the creation of new data points and KPIs as needed by self-reporting impact generators.
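To make the idea of comparable data points concrete, here is a minimal sketch in plain Python. It does not call EAS or any on-chain contract. The schema string imitates the comma-separated "type name" style of EAS schema definitions, but the field names (`kpi`, `value`, `unit`, `evidenceUri`), the `Attestation` class and the helper functions are all hypothetical and are not taken from the IEF codebase.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical impact schema, written in a "type name, type name" style.
IMPACT_SCHEMA = "string kpi, uint256 value, string unit, string evidenceUri"


def parse_schema(schema):
    """Turn 'type name, type name' into a {name: type} mapping."""
    fields = {}
    for part in schema.split(","):
        type_name, field_name = part.split()
        fields[field_name] = type_name
    return fields


@dataclass(frozen=True)
class Attestation:
    attester: str   # who issued the attestation (illustrative)
    recipient: str  # organisation the attestation is about (illustrative)
    data: dict      # payload that should follow the shared schema


def conforms(att, schema):
    """Check that the payload has exactly the schema's fields and types."""
    fields = parse_schema(schema)
    if set(att.data) != set(fields):
        return False
    for name, type_name in fields.items():
        value = att.data[name]
        if type_name == "string" and not isinstance(value, str):
            return False
        if type_name == "uint256":
            is_int = isinstance(value, int) and not isinstance(value, bool)
            if not (is_int and 0 <= value < 2**256):
                return False
    return True


def totals_by_kpi(attestations, schema):
    """Sum values per (organisation, KPI), skipping non-conforming payloads."""
    totals = defaultdict(int)
    for att in attestations:
        if conforms(att, schema):
            totals[(att.recipient, att.data["kpi"])] += att.data["value"]
    return dict(totals)


if __name__ == "__main__":
    sample = [
        Attestation("0xA", "org-1", {"kpi": "workshops_held", "value": 3,
                                     "unit": "count",
                                     "evidenceUri": "https://example.org/1"}),
        Attestation("0xB", "org-1", {"kpi": "workshops_held", "value": 2,
                                     "unit": "count",
                                     "evidenceUri": "https://example.org/2"}),
        # Missing fields, so it is excluded from the totals.
        Attestation("0xC", "org-1", {"kpi": "workshops_held"}),
    ]
    print(totals_by_kpi(sample, IMPACT_SCHEMA))
```

Because every payload is checked against the same schema, a funder can aggregate or compare KPIs across organisations without first interpreting each project's own reporting format.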

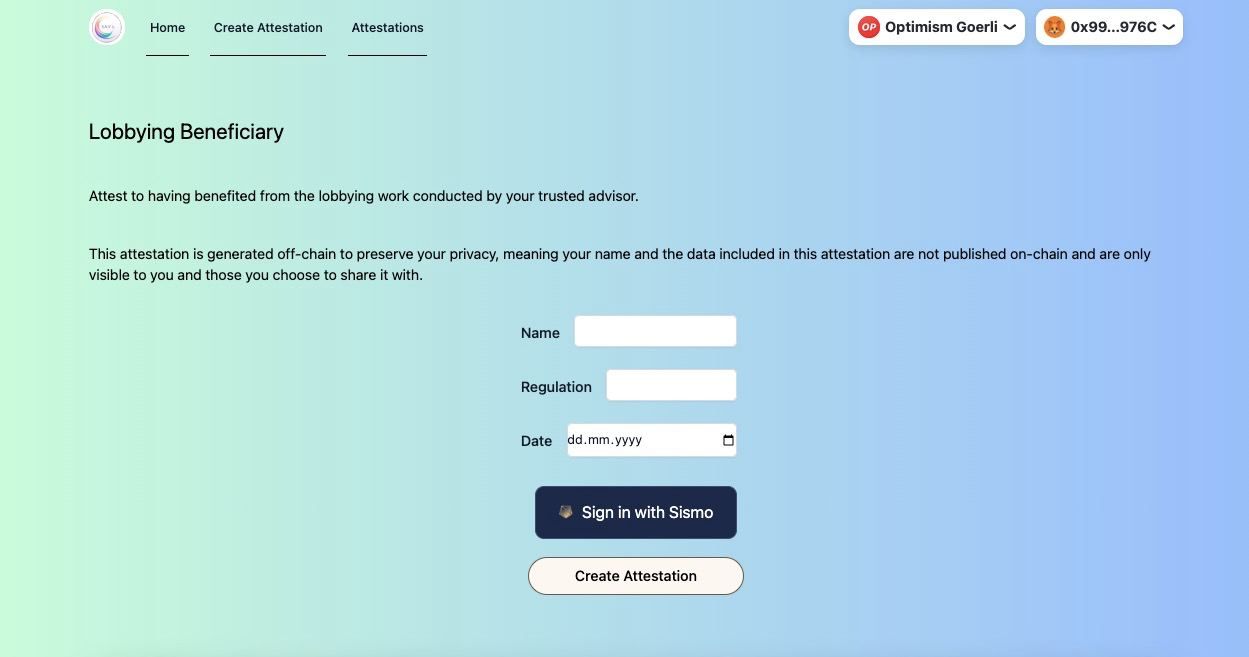

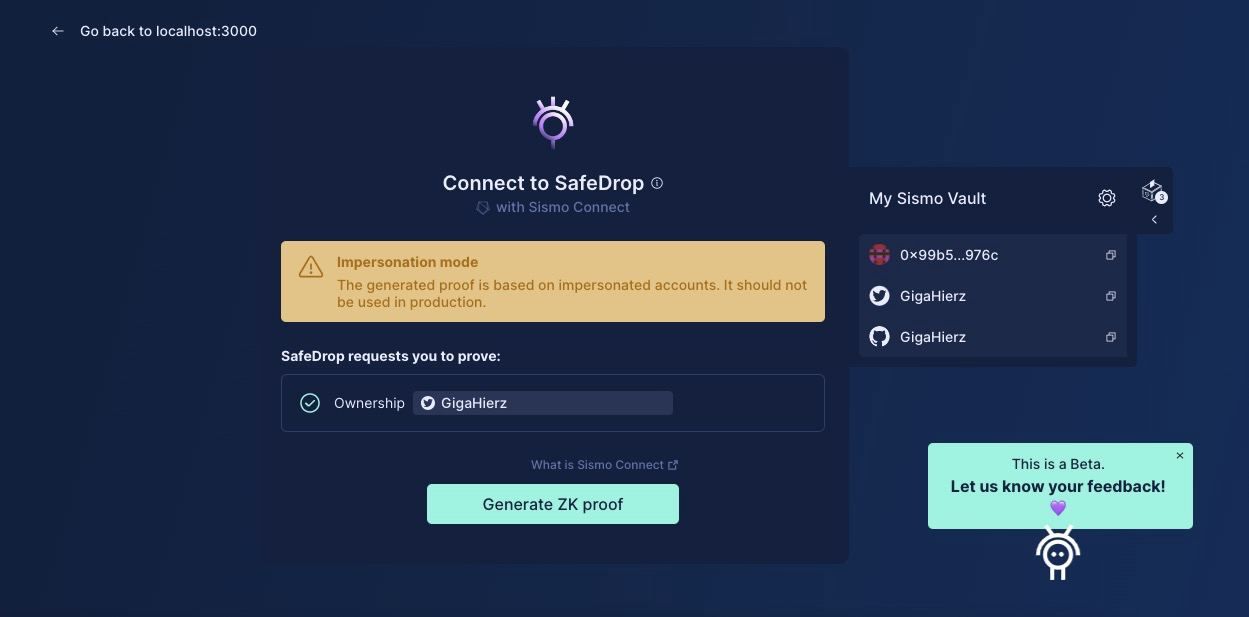

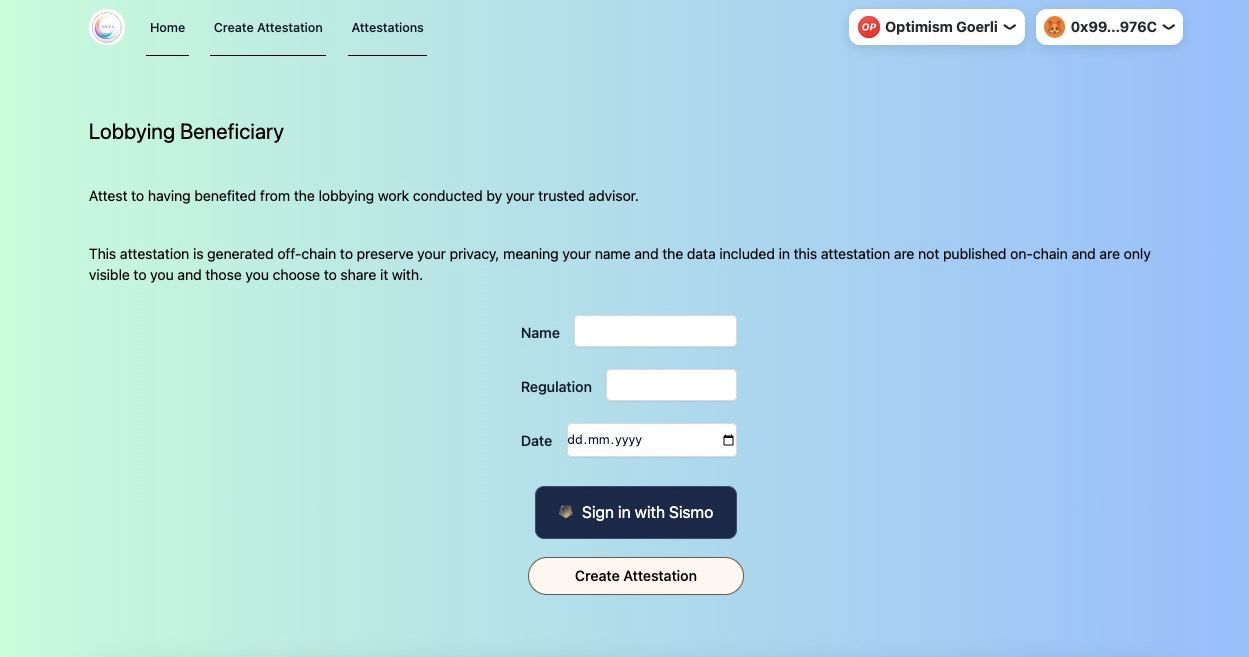

To prevent sybil attacks from polluting the data generated through the issuance of attestations, we have incorporated Sismo as a sign-in requirement prior to the creation of certain attestations that individuals issue to organisations. The example shown in our demo is that of a beneficiary of a crypto lobbying group. Without validation through Sismo, IEF would be vulnerable to sybil attacks, making it easy for malicious actors to game the system and bias the generated data so as to take advantage of public goods funding mechanisms.
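The effect of the sign-in gate on the data can be sketched as a simple filter. The sketch below does not call Sismo. It assumes the sign-in step has already run and produced, for each verified person, a stable anonymous identifier (the `verified_id` field, a hypothetical name). The `BeneficiaryAttestation` class and the one-attestation-per-person-per-organisation rule are likewise illustrative assumptions, not the project's actual logic.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class BeneficiaryAttestation:
    organisation: str
    # Hypothetical: anonymous identifier obtained from a successful sign-in
    # proof, or None when the attester did not pass verification.
    verified_id: Optional[str]
    rating: int


def sybil_filtered(attestations):
    """Keep at most one attestation per verified identity per organisation."""
    seen = set()
    accepted = []
    for att in attestations:
        if att.verified_id is None:
            continue  # unverified attesters are ignored entirely
        key = (att.organisation, att.verified_id)
        if key in seen:
            continue  # the same identity cannot count twice for one org
        seen.add(key)
        accepted.append(att)
    return accepted


if __name__ == "__main__":
    raw = [
        BeneficiaryAttestation("lobby-dao", "id-1", 5),
        BeneficiaryAttestation("lobby-dao", "id-1", 5),  # duplicate identity
        BeneficiaryAttestation("lobby-dao", None, 5),    # unverified
        BeneficiaryAttestation("lobby-dao", "id-2", 4),
    ]
    print(len(sybil_filtered(raw)))
```

Under these assumptions, creating extra wallets does not add extra weight: only attestations tied to a distinct verified identity are counted.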

While we were unable to use the Celo blockchain because the EAS smart contracts have not been deployed on it, we used Celo's launch starter kit to produce a smooth-looking front-end for our project, which greatly reduced our development time.