IPFS FAISS KNN INDEX

KNN index dataset search, showing how items can be searched without a traditional search engine.

Project Description

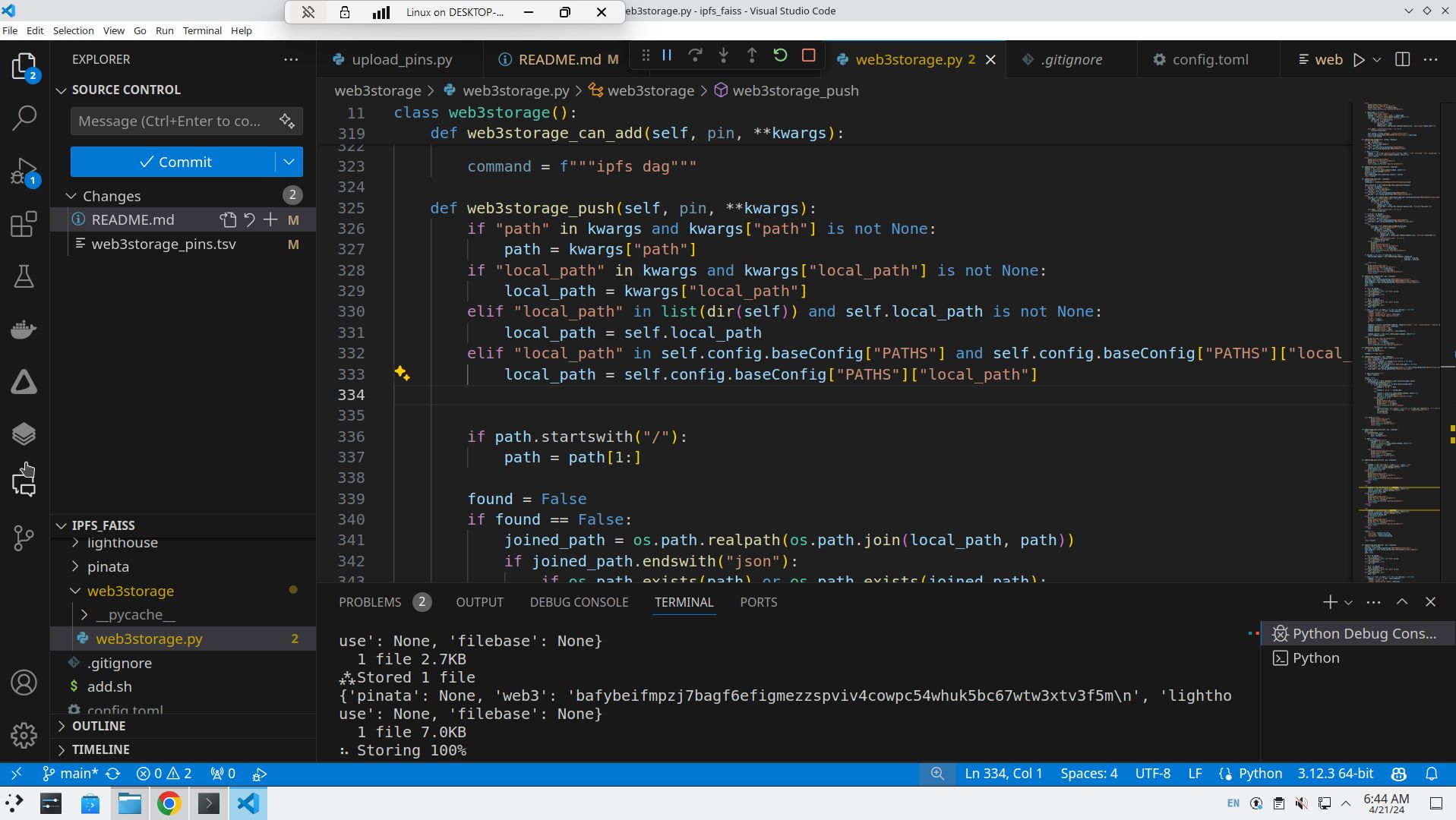

Description Hackathon Repo: https://github.com/endomorphosis/ipfs_faiss

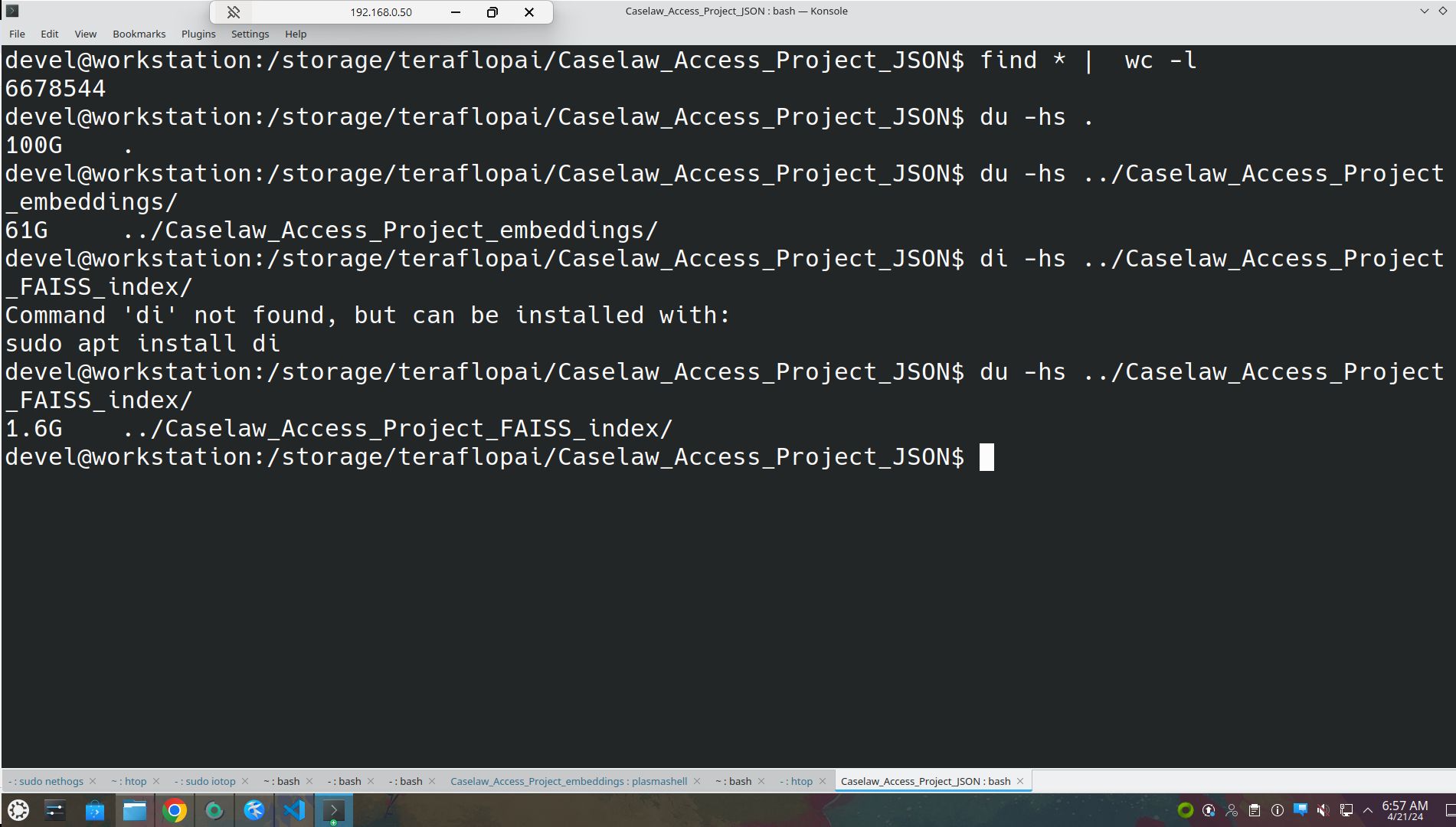

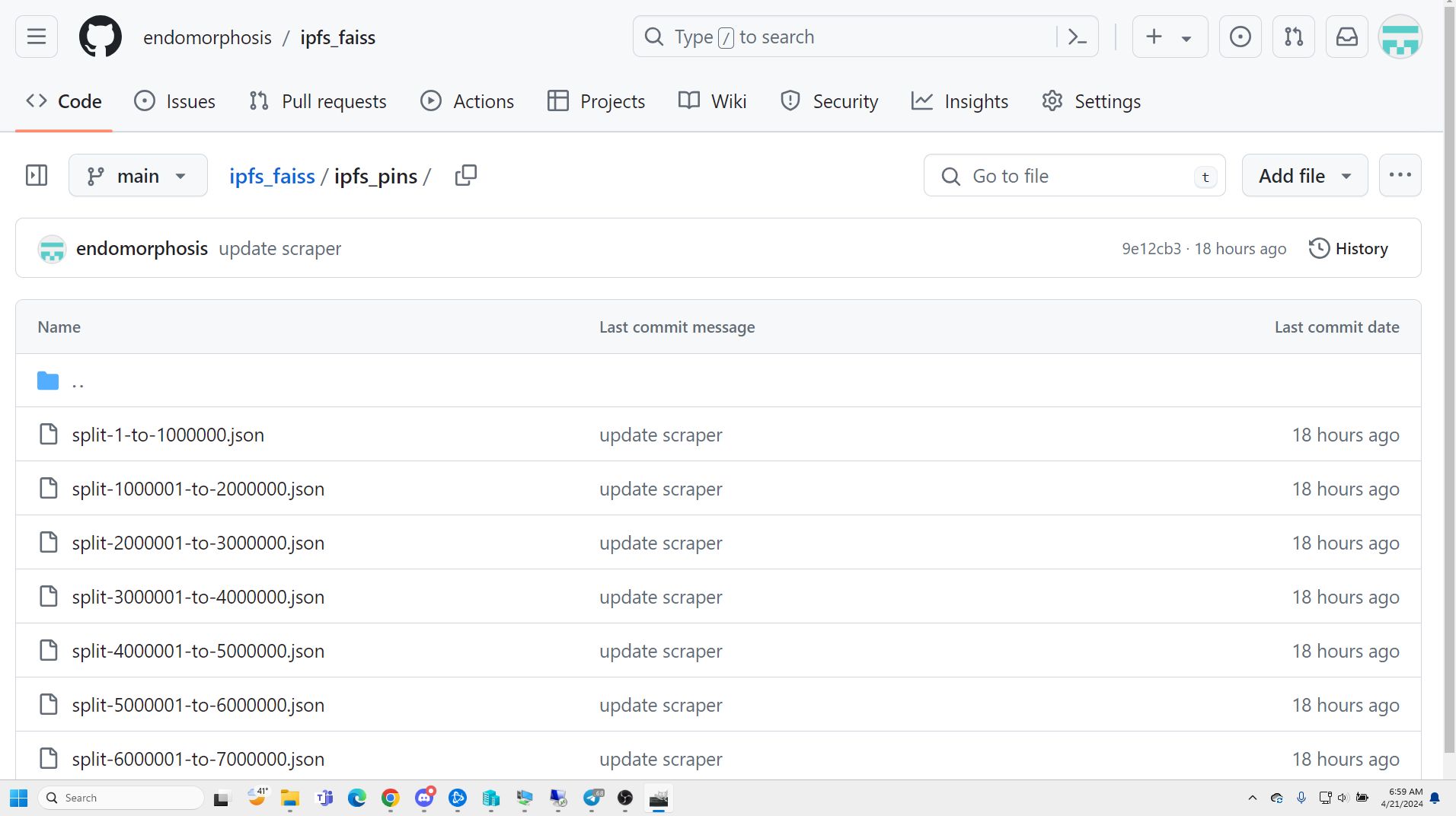

This repository includes an index of all US caselaw, which has been hosted on the IPFS network through web3storage, and is otherwise available on huggingface as well located here: https://huggingface.co/datasets/endomorphosis/Caselaw_Access_Project_JSON

These documents can be searched through using K nearest neighbors, however there are approximately 7,000,000 documents indexed here, so it requires considerable compute to search through the entire index at once.

This will need to be parallelized by sharding up the number of indexes and running queries in parallel, and that is eventually going to be a todo item.

The Embeddings Indexes can be found here https://huggingface.co/datasets/TeraflopAI/Caselaw_Access_Project_embeddings https://huggingface.co/datasets/TeraflopAI/Caselaw_Access_Project_FAISS_index

Introduction: I initially started this, because I was intending on using the IPFS models for my MLops model delivery, but I started to consider after talking to Yannic Kilcher CEO of Deep Judge, regarding the UX of training models and fetching data from huggingface, that having multiple workers simultaneously all pulling data from huggingface was bad for network congestion, and decided to try to modify the huggingface libraries to fetch models from IPFS, which might include a neighboring node in the same datacenter or a pinning service, or from S3 when there is no pin available, or from HTTPS when neither are available. For those who do not know, machine learning systems need to optimize for latency and bandwidth, in order to keep the GPU/TPU/NPU utilization high. This is why for example instead of a hub and spoke network model, Nvidia and Google use some variant of ring networking called a toroidal network, in order to avoid the bottlenecks in a traditional network layout. This means that each accelerator is directly sharing data with its neighboring accelerators, instead of relying on a centralized router, because if you double your number of accelerators you will double your network throughput per node, rather than halving it with a traditional hub and spoke network layout.

Subsequently the US government is proposing to classify open source models as 'dual use' export controlled, in addition to KYC and deplatforming for people who are 'red flagged'. I have been working on some code to scrape huggingface and serve models on IPFS, mainly out of concerns that a 3d avatar legal secretary will get attacked or shut down by malefactors, because it will be assisting people in their lawsuits against the government, many of whom are being held in jail without a public defender because there are simply none available. However with the new regulations it appears that huggingface will be under pressure to comply with the ITAR regulations, and close access to any machine learning model with more than 10 billion parameters, and will face jail time if they do not comply, so I am creating this to maintain access to ML models even if they are censored. While I certainly think that this regulation is a classic "prior restraint on speech", that will likely take several years to work its way through the courts, and in the meanwhile there needs to be a stopgap measure to mitigate the supply chain risk.

This library acts as a wrapper which adds a static methods .from_auto_download and .from_ipfs to the transformers library, but the repository also contains a scraper for huggingface with a CLI, some model conversion utilities, and a website to easily browse what models are available in the collection. The ipfs_kit library support a wrapper for ipfs_daemon, ipfs_cluster_service and ipfs_cluster_follow, and changing self.role from leecher to worker or master should install / configure it.

Sources: JusticeDAO pitch deck

https://www.federalregister.gov/documents/2024/01/29/2024-01580/taking-additional-steps-to-address-the-national-emergency-with-respect-to-significant-malicious

https://www.regulations.gov/document/NTIA-2023-0009-0001

https://venturebeat.com/ai/nist-staffers-revolt-against-potential-appointment-of-effective-altruist-ai-researcher-to-us-ai-safety-institute/

https://yro.slashdot.org/story/24/03/11/185217/us-must-move-decisively-to-avert-extinction-level-threat-from-ai-govt-commissioned-report-says

https://www.opb.org/article/2022/01/20/american-bar-association-finds-oregon-has-just-13-of-needed-public-defenders/

How it's Made

python:

huggingface web3storage alot of lines of code

i also broke down the monorepo into smaller repos there is also a huggingface scraper available in other repos

related Repos: https://github.com/endomorphosis/ipfs_transformers

https://github.com/endomorphosis/ipfs_transformers_js

https://github.com/endomorphosis/ipfs_datasets

https://github.com/endomorphosis/orbitdb-kit/

this code imports an ipfs_model_manager, which helps allieviate bandwidth bottleneck by always downloading the model from the fastest source whether it be ipfs / https / s3, and does not suffer from congestion like a traditional hub and spoke based network